It seems like batch processing should have no place in agile development. It conjures up the image of someone sitting at a IBM 026 keypunch machine typing in Job Control Language (JCL) and COBOL II. It is doubtful that any agile team has ever experienced this. In any case, that is not what is being counted here.

Batch processes are non-functional processes that do not cause information to cross the application boundary. A typical example of that would be some type of end of month processing. For example, there might be a program that changes the running totals that are maintained in a file. There might be 12 running totals for the past 12 months. The program might discard the oldest total and move the other totals between the 11 months that remain. Then, a new total might be placed in the 12th bucket. Nothing has crossed the application boundary. The program runs because it is scheduled to run at the end of the month. Likewise, it does not issue any kind of user report. End of day, week, month, etc. are not the only possible batch processes. Programs that load information into files may also be batch processes. This is only the case if they have not been counted as conversion functionality.

Sometimes, it is easier to identify batch processes by describing what they are not. Batch processes are not functional. Therefore, anything that is functional is not counted here. If data is transferred to or from an external application, then it is probably functional unless it is updating a code table. Anything reading screens or generating reports are probably functional. In any case, they are not batch processes. When data in an internal logical file (ILF) is updated as part of an enhancement, it is usually considered conversion functionality. For example, if a new field is added to an ILF to contain someone’s birthday, then part of the project would be the conversion job to fill the birthday fields of existing records. What if there were a one-time job to update birthdays from another source. If the birthday field were not new, then the process would not be conversion. However, many practitioners have counted these one-time jobs as conversions. If so, then they cannot be counted as batch processes.

Not all non-functional processes are batch processes. Anything that is designed to be performance enhancing is not considered to be a batch process. Changes to the data in code tables are non-functional, but they are not batch processes. These processes are covered by other non-functional categories. Any batch processes that have not been counted by one of these other functional or non-functional processes can be considered a batch process.

How do you group batch processes? This is where your JCL experience would be helpful. Batch processing was broken into jobs. Jobs were broken into steps. For estimating purposes, consider the job level. All of the steps that occur for that job would be considered part of the batch process.

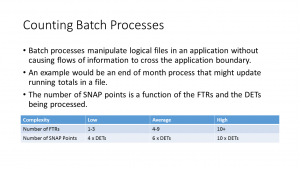

When calculating the SNAP points for a batch process, first count up the number of File Types Referenced (FTR). If a batch process must read from a customer file, then the FTR number is one. If multiple steps of the batch process read from different files, then the FTR number is the sum of the unique files. In other words, if two steps read from the customer file, the FTR is still one. If one step reads from the customer file and the next writes to the order file, then the FTR number is two. The number of FTRs is used to establish the complexity, as shown in the table at the top of this post. If there is three or less FTRs, there is low complexity; five to nine FTRs, average complexity; 10 or more means high complexity.

The SNAP count is a function of the complexity and the number of Data Element Types. If the complexity is low, the SNAP count is four times the number of DETs; average, 6 times; high, 10 times. This is a time where the DET count must be arrived at with care. Many times in sizing, the DET count becomes part of a range. For example, when arriving at a function point count for an External Input, any DET count over 15 becomes high complexity. It does not matter whether the count is 16 or 60. The situation is different with the SNAP count for a batch process. If an average complexity batch process works with 16 DETs, the SNAP count is 96; for 60 it would be 360. Therefore, an accurate count or estimate of the DETs is necessary. Many times estimators use the number of fields as a DET count. That is not true. Using the example that was mentioned earlier, suppose that the batch process was updating 12 rolling totals. This is one DET, not 12. The repeating groups are all the same DET. There are some borderline cases. Is the date one DET or 3 (month, day and year)? It depends upon whether the different date components are used differently. The estimator should use their judgement.

Here is our example: if there is an end of month process that discards the oldest running total, shifts each of the 11 running totals down one, and puts a new running total in the last bucket, then it is a batch process. Since it only interacts with a single running total file, its FTR is one and the batch process has low complexity. Since it has low complexity and a single DET, the SNAP count is 4 (4 x 1).

The one question on people’s minds should be, “How can I estimate the number of DETs early in the life cycle?” A better question might be, “Can I identify the batch processes early in the life cycle?” The bad news is that you can not. These are the types of things that are identified in the design phase. Did I get your attention? There is no design phase in an agile development effort. There are no analysts, designers or testers. There is only development being done by developers. Developers are clarifying requirements from the user stories during the iteration where they are being implemented. Developers are designing the simplest solutions that work during each iteration and then refactoring those designs when it makes sense to do so. However, all of this development is done during iterations (sprints). The need for batch processes is usually uncovered during later iterations. It is usually after files have been put into place and the need for maintaining different types of data have been uncovered. The good news is that the batch processes do not impact estimates early in the life cycle as much as later. Early iterations are often dominated by additions to functionality. Later iterations and enhancements may be dominated by changes to non-functional aspects of the system.

There are other reasons to identify batch processes. Suppose there is a sprint where it is anticipated that 5 user stories will be implemented. While working on the stories, the need for some bath processes arises. Implementing those processes will probably result in a story or two not being implemented. This is the result in the expansion of software size, not a drop in velocity. This has to be understood so as not to underestimate velocity on future iterations.

People who are using estimating models like COCOMO II, SEER or SLIM also have to capture the programming language or languages being used to implement the batch process. If there are multiple languages, then they must be apportioned. For example, if 50% of the batch process is written in JavaScript and the other 50% is in Perl, then this should be captured.

Leave a Reply